Monday, 13 January 2025 (18:22)

PyTorch Masked Language Modeling | Transformers | Google Electra Model | NLP (Programming Datascience and Others)

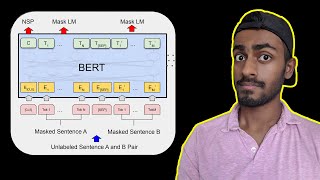

Masked Language Modeling (MLM) in BERT pretraining explained (Data Science in your pocket)

ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators | NLP Journal Club (The NLP Lab)

ELECTRA | Lecture 57 (Part 4) | Applied Deep Learning (Supplementary) (Maziar Raissi)

ELECTRA: Pre-Training Text Encoders as Discriminators Rather than Generators (Connor Shorten)

ELECTRA: How to Train BERT 4x Cheaper (Vaclav Kosar)

10 minutes paper (episode 11); Electra: Pre-training Text Encoders as Discriminators (AIology)

Electra: Pre-training text encoders as discriminators rather than generators presented by Chong Cher (Preferred.AI)

Transformers, explained: Understand the model behind GPT, BERT, and T5 (Google Cloud Tech)

BERT Neural Network - EXPLAINED! (CodeEmporium)